Q1 under wraps

Three months of full-time game development are complete. At this point, some indie developers might have a game to show. Not I!

Month 1: Draw something to the screen. Slip & stumble over Rust

Month 2: Draw a bit more. Light things up. Simple asset pipeline

Month 3: Multithreading, networking, databases and architecture. Rust is 50% less scary

I now understand most of the technical pieces I needed to confront, and nothing has pushed me to abandon this game. Rust's ecosystem, at least its community libraries, is not as mature as I'd like. Documentation is subpar, and my overall development speed is still about 20% of what it is in C#/TypeScript. Realistically, the best I could hope for at this stage was steady forward progress, and I'm happy with where things stand.

Architecture is becoming more important to figure out. In Rust, it's common to keep multiple "crates" (projects) in separate folders within a single GitHub repo; Cargo calls this a workspace. It's not exactly a clean microservice approach, but it's very convenient when writing a large project that relies on multiple local libraries. A single repo for this game is probably fine.

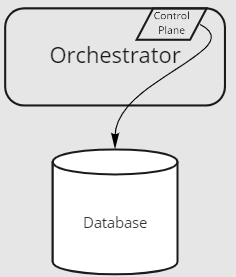

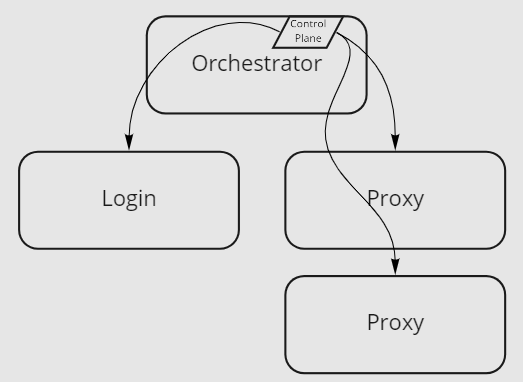

I'm taking a multiplayer-first approach to architecture. The client "node" will draw things to the screen, accept user input, and present existing worlds/realms for the player to log into. For a local single-player session, the player will launch an "orchestrator" node, which manages the "server" experience.
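Here is a minimal sketch of the local single-player case, assuming the orchestrator simply runs the server loop on a background thread inside the client process and the two sides talk over `std::sync::mpsc` channels. The message types, `LocalOrchestrator`, and its methods are hypothetical illustrations, not the game's actual code; a real multiplayer build would swap the channels for a network transport.

```rust
use std::sync::mpsc::{self, Receiver, Sender};
use std::thread::{self, JoinHandle};

/// Requests the client node sends to the server (hypothetical protocol).
#[derive(Debug)]
pub enum ClientMsg {
    ListRealms,
    JoinRealm(String),
    Quit,
}

/// Replies the server sends back to the client (hypothetical protocol).
#[derive(Debug, PartialEq)]
pub enum ServerMsg {
    Realms(Vec<String>),
    Joined(String),
    UnknownRealm(String),
}

/// Hypothetical orchestrator for a local session: it owns the server
/// "experience" and runs it on a thread within the client process.
pub struct LocalOrchestrator {
    to_server: Sender<ClientMsg>,
    from_server: Receiver<ServerMsg>,
    server: Option<JoinHandle<()>>,
}

impl LocalOrchestrator {
    /// Spawns the server loop on its own thread, serving the given realms.
    pub fn launch(realms: Vec<String>) -> Self {
        let (to_server, server_rx) = mpsc::channel::<ClientMsg>();
        let (server_tx, from_server) = mpsc::channel::<ServerMsg>();
        let server = thread::spawn(move || {
            // Handle requests until Quit arrives or the client hangs up.
            for msg in server_rx {
                let reply = match msg {
                    ClientMsg::ListRealms => ServerMsg::Realms(realms.clone()),
                    ClientMsg::JoinRealm(name) if realms.contains(&name) => ServerMsg::Joined(name),
                    ClientMsg::JoinRealm(name) => ServerMsg::UnknownRealm(name),
                    ClientMsg::Quit => break,
                };
                if server_tx.send(reply).is_err() {
                    break; // the client side is gone
                }
            }
        });
        LocalOrchestrator { to_server, from_server, server: Some(server) }
    }

    /// Sends one request from the client node and blocks for the reply.
    pub fn request(&self, msg: ClientMsg) -> Option<ServerMsg> {
        self.to_server.send(msg).ok()?;
        self.from_server.recv().ok()
    }

    /// Asks the server thread to stop and waits for it to finish.
    pub fn shutdown(mut self) {
        let _ = self.to_server.send(ClientMsg::Quit);
        if let Some(handle) = self.server.take() {
            let _ = handle.join();
        }
    }
}
```

Because the client only ever sees `request`, the same client code could later talk to a remote server without knowing whether the "server" is a thread or a separate machine.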

Something like this could scale within a cloud environment by adding hardware as the world grows and more players connect. The control plane tells the orchestrator whether it needs to literally spin up a physical server or VM (in a cloud environment) or just a thread within the client process (on a local machine).
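To make the control-plane idea concrete, here is a minimal sketch, assuming the decision depends only on where the orchestrator runs and how many players are connected. `Environment`, `HostTarget`, `ScalePlan`, `plan_scale`, and the players-per-server capacity are all hypothetical; a real control plane would then act on the plan by calling a cloud provider's API or `std::thread::spawn`.

```rust
/// Where the orchestrator is running (hypothetical).
#[derive(Debug, Clone, Copy)]
pub enum Environment {
    /// Single-player session on the player's own machine.
    Local,
    /// Cloud deployment; `bare_metal` picks physical servers over VMs.
    Cloud { bare_metal: bool },
}

/// Kind of host a new game server should run on (hypothetical).
#[derive(Debug, Clone, Copy, PartialEq)]
pub enum HostTarget {
    ClientThread,
    VirtualMachine,
    PhysicalServer,
}

/// What the orchestrator should spin up next (hypothetical).
#[derive(Debug, PartialEq)]
pub struct ScalePlan {
    pub target: HostTarget,
    pub spawn: u32,
}

/// Scale-up only: decide the host kind and how many extra servers are needed.
pub fn plan_scale(
    env: Environment,
    connected_players: u32,
    players_per_server: u32,
    running_servers: u32,
) -> ScalePlan {
    let target = match env {
        Environment::Local => HostTarget::ClientThread,
        Environment::Cloud { bare_metal: true } => HostTarget::PhysicalServer,
        Environment::Cloud { bare_metal: false } => HostTarget::VirtualMachine,
    };
    let per = players_per_server.max(1); // avoid division by zero
    // Ceiling division, always keeping at least one server for the world.
    let needed = (connected_players / per + u32::from(connected_players % per != 0)).max(1);
    ScalePlan { target, spawn: needed.saturating_sub(running_servers) }
}
```

Keeping the decision a pure function separates *what* to spin up from *how*, so the same orchestrator logic can run on a player's machine and in the cloud.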

How is the world "partitioned" so that multiple game servers can cooperate within a single shared world? That's a topic for a future blog post, I suppose.
